Setting up Redis for Production Environment

Published on Aug 20, 2013

We all know this. You get something to work on your own development machine, but don’t really feel confident enough to do the same on a production box. In development, you can always wipe out everything and start over. You cannot do the same in production.

Fortunately, Redis has always been a nice service to deal with. There are, however, a few things you need to take care of when your website depends on Redis. You want to make sure everything is right, from the installation process to a proper backup mechanism.

Here are a few tips for setting up Redis from the very beginning to get you going. The assumption is that you have a clean machine running Ubuntu Server 12.04 or newer.

Installation

The current best practice is to use a configuration management tool and treat our setup as code. We are very happy users of Puppet ourselves (Chef is also very popular), but in this case, let’s just keep things simple and use our system’s packaging system. We can revisit our setup in a future post.

The first thing we want to do is to add a reliable source of the latest stable release of Redis (2.6.14 at the moment). To do that, we can just add a PPA from our hero Chris Lea and install the redis-server package:

$ sudo add-apt-repository ppa:chris-lea/redis-server

$ sudo apt-get update

$ sudo apt-get install redis-server

If everything went well, you should now have a running Redis server. The package also installed a simple init.d script, so we can start, restart and stop the service as usual. We also don’t have to worry about starting Redis ourselves after a reboot.

We can also do a quick check by running the Redis Benchmark. It will not only stress test Redis itself but also verify that there’s nothing wrong with our installation. The following will issue 100,000 requests from 50 parallel clients, pipelining 12 commands at a time.

$ redis-benchmark -q -n 100000 -c 50 -P 12

Configuration

Redis comes with a very reasonable and heavily commented configuration file. Take a look at the conf file at /etc/redis/redis.conf and walk through it.

One of the most important settings to be aware of is persistence. Redis provides three options: RDB, AOF, or both.

The RDB option represents point-in-time snapshots of the current dataset. A snapshot is represented as a regular file located at /var/lib/redis called dump.rdb (by default). Redis regularly dumps its dataset to the file, making it the perfect candidate for backups.

You can change how often Redis creates these snapshots with the save statement in the configuration file. To dump the dataset every 15 minutes (900 seconds) if at least one key changed, you can say:

save 900 1

You can have more than one of these statements to make your snapshotting more granular. Note that there are already a few of these statements in the configuration file.
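For instance, adding a rule such as save 60 1000 next to save 900 1 would also trigger a snapshot after 60 seconds if at least 1000 keys changed. To see which rules are actually active, something like the following could help. It is only a minimal sketch: the function name list_save_rules is made up for illustration, and it assumes the configuration lives at /etc/redis/redis.conf unless another path is passed in.

```shell
#!/bin/sh
# Hypothetical helper: print the active (uncommented) "save" rules
# from a Redis configuration file.
list_save_rules() {
    conf="${1:-/etc/redis/redis.conf}"
    # Match lines that begin with "save" followed by whitespace.
    # Commented-out rules start with "#" and therefore do not match.
    grep -E '^[[:space:]]*save[[:space:]]' "$conf"
}
```

Calling list_save_rules without arguments should print each active rule on its own line.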

Now what happens if your machine crashes before it has a chance to save the newest dataset? Everything written since the last snapshot is lost. Sometimes that is not a big deal: you might be using Redis as a cache and simply don’t mind losing its data. If, however, the data is important to you and you want to make sure it is safe, you should consider AOF.

AOF (append only file) works as a log of all incoming write operations. Redis instantly writes to the log file, so even if your machine crashes, it can still recover and have the latest data. Similar to RDB, the AOF log is represented as a regular file at /var/lib/redis called appendonly.aof (by default). To enable it, set the following in the configuration file:

appendonly yes

The problem with this, though, is that the operating system maintains an output buffer, and a successful write doesn’t necessarily mean that the data gets written (flushed) to the disk immediately. To tell the OS to really write the data to the disk, Redis needs to call the fsync() function right after the write call, which can be slow. That’s why Redis provides 3 options. From the docs:

- no: don’t fsync, just let the OS flush the data when it wants. Faster.

- always: fsync after every write to the append only log . Slow, Safest.

- everysec: fsync only one time every second. Compromise.

The everysec option is the default and it’s sufficient for most installations:

appendfsync everysec

As mentioned in the beginning, you can safely use both RDB and AOF at the same time. I would recommend it for every non-cache usage.

A few more notes:

- Think twice before binding Redis to something other than 127.0.0.1 (localhost). Redis is usually an internal service that doesn’t need to be exposed. If you have multiple servers that need to access the Redis instance, feel free to change the option.

- Consider setting a password with the requirepass option.

- Consider disabling (renaming to an empty string) certain possibly harmful commands. Candidates are FLUSHALL (remove all keys from all databases), FLUSHDB (similar, but from the current database), CONFIG (reconfiguring server at runtime) and SHUTDOWN.
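The notes above could translate into configuration lines roughly like the ones printed by the sketch below. It only prints suggestions for review and does not touch redis.conf. The bind, requirepass and rename-command directives exist in Redis, while the function names and the choice of a 64-character random hex password are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical helper: generate a 64-character random hex password.
generate_redis_password() {
    head -c 32 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Hypothetical helper: print hardening lines to review and paste
# into redis.conf by hand.
print_hardening_config() {
    printf 'bind 127.0.0.1\n'
    printf 'requirepass %s\n' "$(generate_redis_password)"
    # Renaming a command to an empty string disables it entirely.
    for cmd in FLUSHALL FLUSHDB CONFIG SHUTDOWN; do
        printf 'rename-command %s ""\n' "$cmd"
    done
}

print_hardening_config
```

Keep in mind that a disabled command is also unavailable to your own tooling, so pick the subset that fits your setup. Once a password is set, clients have to authenticate first, for example with redis-cli -a followed by the password.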

Do not forget to restart Redis after you make any configuration changes:

$ sudo service redis-server restart

One last thing is to set the vm.overcommit_memory kernel variable to 1. This setting affects how memory allocation is handled when a parent process forks a child and the shared copy-on-write memory pages start to change. Without this setting, Redis might fail when dumping its dataset to disk. You can find more information about this in the Redis FAQ. Edit /etc/sysctl.conf, add the following line and reboot the box:

vm.overcommit_memory = 1
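If a reboot is inconvenient, the setting can usually be applied to the running kernel as well. The following is a minimal sketch, assuming a Linux box that exposes the value at /proc/sys/vm/overcommit_memory. The function name overcommit_enabled is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the overcommit setting is 1.
# The optional argument overrides the path that is read.
overcommit_enabled() {
    path="${1:-/proc/sys/vm/overcommit_memory}"
    [ "$(cat "$path" 2>/dev/null)" = "1" ]
}

# To apply the setting without a reboot (needs root), one could run:
#   sudo sysctl -w vm.overcommit_memory=1
# or reload everything from /etc/sysctl.conf:
#   sudo sysctl -p
if overcommit_enabled; then
    echo "vm.overcommit_memory is 1"
else
    echo "vm.overcommit_memory is not 1 yet"
fi
```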

Monitoring

Everything seems to work fine now, but we want to make sure it stays that way. One way to do that is to set up a monitoring service that watches other processes and is able to react if they crash or otherwise misbehave. Our tool of choice for the job is Monit (there are plenty of others). The installation process is again very simple:

$ sudo apt-get install monit

The configuration file (/etc/monit/monitrc) is again heavily commented and no significant changes are required. One thing we want to do, however, is to enable Monit’s internal web server so we can query the status of services from the terminal and see its web interface. Add the following at the bottom of monitrc (/etc/monit/monitrc):

set httpd port 8081 and

use address localhost # only accept connection from localhost

allow localhost # allow localhost to connect to the server and

allow admin:monit # require user "admin" with password "monit"

Next, we need to tell Monit to monitor Redis. To do that, just create a file at /etc/monit/conf.d/redis.conf with the following:

check process redis-server with pidfile "/var/run/redis/redis-server.pid"

start program = "/etc/init.d/redis-server start"

stop program = "/etc/init.d/redis-server stop"

if failed host 127.0.0.1 port 6379 then restart

if 5 restarts within 5 cycles then timeout

The file is pretty self-explanatory. Just note that a cycle refers to the polling interval (defaults to 120 seconds). There’s a lot you can add here, such as custom alerts when CPU or RAM usage exceeds certain boundaries. Take a look at Monit’s documentation for all the possibilities.

To make Monit aware of these changes, reload its configuration:

$ sudo service monit reload

Now we should be able to query Monit for service statuses:

$ sudo monit status

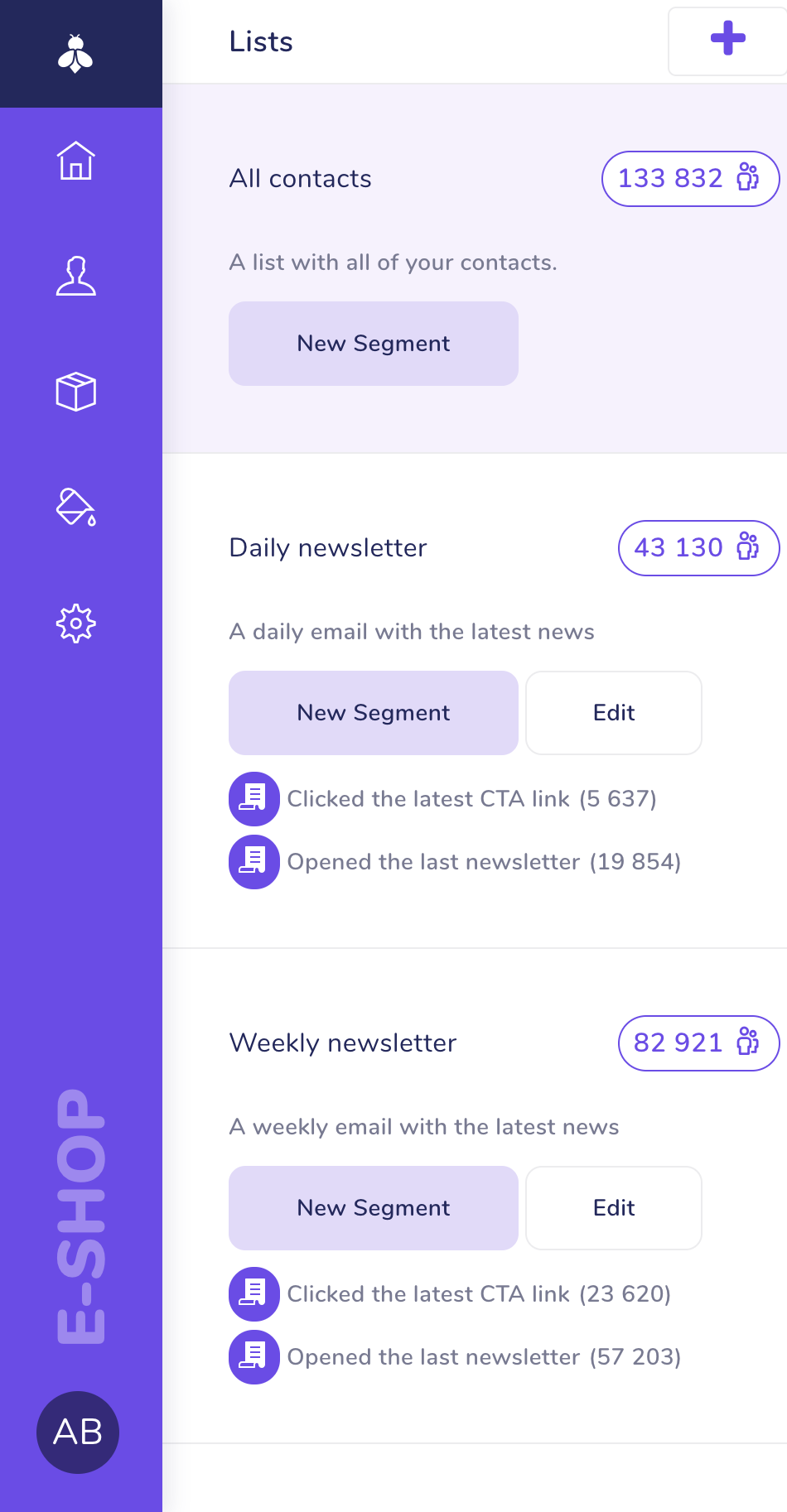

Or we can visit http://localhost:8081, log in with admin/monit, select redis-server and see an output similar to this:

Note that if you are working with a remote system via SSH, you can’t directly access the remote box’s localhost URL. A solution to this is to simply forward a port from the remote machine to your local machine:

ssh -L 8081:localhost:8081 your_user@your_remote_machine -f -N

Now you can access http://localhost:8081 and it will be as if you had issued the request from the remote machine.

Finally, let’s not forget about the log file located at /var/log/redis/redis-server.log as it’s usually the first place to look if there’s something wrong (Redis won’t start for example).

Backup & Restore

As explained in the Configuration section, Redis provides RDB snapshots that are perfect for making backups. The RDB file contains every single piece of data you have stored, so you can safely back up just the RDB file and be able to restore it if an accident happens. To make a backup, copy the RDB file:

cp /var/lib/redis/dump.rdb /somewhere/safe/dump.$(date +%Y%m%d%H%M).rdb

It’s as simple as that. There’s no need to prepare the server. You can even do this while the server is running, because Redis dumps to a temporary file and then atomically renames it to the final one, so the previous file stays available until you finish copying. As a security precaution, consider encrypting the file before you upload it to external storage. A reasonable way to automate the process is a cron task.
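The copy step could be wrapped in a small script for cron. The following is a sketch under stated assumptions: the function name backup_rdb, the destination directory and the seven-day retention are all made up for illustration. It relies on the fact that an RDB file begins with the ASCII string REDIS, which gives a cheap sanity check before a copy is kept.

```shell
#!/bin/sh
# Hypothetical helper: copy an RDB snapshot into a backup directory
# under a timestamped name and prune old copies.
# Usage: backup_rdb [source_file] [dest_dir] [keep_days]
backup_rdb() {
    src="${1:-/var/lib/redis/dump.rdb}"
    dest_dir="${2:-/somewhere/safe}"
    keep_days="${3:-7}"
    target="$dest_dir/dump.$(date +%Y%m%d%H%M).rdb"

    mkdir -p "$dest_dir" || return 1
    cp "$src" "$target" || return 1

    # An RDB file starts with the magic string "REDIS"; reject anything else.
    if [ "$(head -c 5 "$target")" != "REDIS" ]; then
        echo "backup_rdb: $target does not look like an RDB file" >&2
        rm -f "$target"
        return 1
    fi

    # Remove backups that are older than keep_days days.
    find "$dest_dir" -type f -name 'dump.*.rdb' -mtime "+$keep_days" -exec rm -f {} +

    # Print the path of the new backup so callers can encrypt or upload it.
    echo "$target"
}
```

Saved as a script that ends with a call to backup_rdb (say at the hypothetical path /usr/local/bin/redis-backup.sh), it could be scheduled hourly with a crontab entry such as 0 * * * * /usr/local/bin/redis-backup.sh.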

Now let’s imagine there’s been an accident (e.g. someone deleted some data) and we want to restore a previous version of our dataset from these RDB backups. The procedure depends on whether you have your Redis server configured to use AOF mode or not.

RDB only, without AOF

Without AOF, the restore process is really simple. First, make sure that nobody is working with or is connected to the Redis server. Next, take it down with Monit’s stop command (otherwise Monit would start the service automatically):

$ sudo monit stop redis-server

If you are not using a monitoring service, you can simply run:

$ sudo service redis-server stop

Next, we want to delete the current dataset snapshot (dump.rdb by default).

$ cd /var/lib/redis

$ sudo rm -f dump.rdb

Now there’s no snapshot file, so we need to copy the backup one back in place:

$ sudo cp /backup/dump.rdb .

$ sudo chown redis:redis dump.rdb

Notice the second line: we need to make sure that the snapshot is owned by the same user the Redis server runs as. Now we can just start the Redis server and we are done.

$ sudo monit start redis-server

RDB + AOF together

With AOF, the process is a little bit more involved. When Redis starts, it looks at the AOF log as the primary source of information because it always has the newest data available. The problem is that if we only have an RDB snapshot and no AOF log (or we have a log but it has been affected by the accident so we cannot use it), Redis would still use the AOF log as the only data source. And since the log is missing (same as empty), it would not load any data at all and would create a new empty snapshot file.

The following solution takes advantage of the fact that you can recreate the AOF log from the current dataset even if the AOF mode is currently disabled.

As in the “RDB only, without AOF” section, take down the server, go to /var/lib/redis, delete any snapshots and logs and finally copy the backup dump.rdb file back in place.

$ sudo rm -f dump.rdb appendonly.aof

$ sudo cp /backup/dump.rdb .

$ sudo chown redis:redis dump.rdb

Now the interesting part. Go to the configuration file (/etc/redis/redis.conf) and disable AOF:

appendonly no

Next, start the Redis server and issue the BGREWRITEAOF command:

$ sudo monit start redis-server

$ redis-cli BGREWRITEAOF

It might take some time. You can check the progress by looking at the aof_rewrite_in_progress value from the info command (0 - done, 1 - not yet):

$ redis-cli info | grep aof_rewrite_in_progress
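Instead of re-running the command by hand, a small polling loop could wait for the rewrite to finish. This is a minimal sketch: the function name wait_for_aof_rewrite, the REDIS_CLI override variable and the one-second interval are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical helper: block until aof_rewrite_in_progress reports 0.
# Usage: wait_for_aof_rewrite [max_attempts] [sleep_seconds]
# REDIS_CLI may point at a different redis-cli executable.
wait_for_aof_rewrite() {
    attempts="${1:-60}"
    interval="${2:-1}"
    cli="${REDIS_CLI:-redis-cli}"
    while [ "$attempts" -gt 0 ]; do
        # The info output contains a line like "aof_rewrite_in_progress:0".
        if "$cli" info | grep -q 'aof_rewrite_in_progress:0'; then
            return 0
        fi
        attempts=$((attempts - 1))
        sleep "$interval"
    done
    echo "wait_for_aof_rewrite: rewrite not finished, giving up" >&2
    return 1
}
```

With the defaults, wait_for_aof_rewrite gives up after roughly a minute. An unreachable server looks the same as a rewrite that is still running, so a failure is worth a look at the log file.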

Once it’s done, you should see a brand new appendonly.aof file. Next, stop the server, turn AOF back on (appendonly yes) and start the server again.

Now you might be asking why we don’t just back up the AOF log. The answer is that you could, but AOF logs tend to get really large and we don’t usually want to waste disk space.

That’s all for a basic Redis setup. You should be good to go. There are plenty of things you can do to make the setup more robust, e.g. slave replication, but I would like to address that in a separate post as it’s a big topic.

We are very interested in your own tips & tricks. Please share in the comments so we can all learn something new.

Written by Jiri Pospisil of sensible.io.